Overview

Recent advances in mobile robotics have made mobile robots available for both commercial and research use. The primary challenges in deploying a mobile robot in a dynamic environment are mapping and navigation. Simultaneous localization and mapping (SLAM) provides the understanding of the environment needed for navigation and path planning. In this work, we explore mapping and navigation that incorporate the semantics of the environment. For the experimental setup, we designed a robot (BeetleBot) equipped with a Kobuki mobile base, a RealSense RGB-D camera, range sensors, and an NVIDIA Jetson Xavier as the onboard computer. Autonomous semantic mapping and navigation are performed using RTAB-Map, combined with the A* algorithm for exploring and updating the unknown environment and a deep learning-based object detection algorithm. A proportional-integral-derivative (PID) controller is implemented for the BeetleBot. The software is developed on the Robot Operating System (ROS). The experimental evaluation demonstrates the mapping and localization efficacy of the BeetleBot.
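To make the controller concrete, here is a minimal sketch of a discrete PID update in Python. It is not the BeetleBot implementation: the class name `PID`, the gains, the symmetric `out_limit` clamp, and the idea of feeding it a heading error are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete PID; all names and values here are hypothetical."""
    kp: float
    ki: float
    kd: float
    out_limit: float          # symmetric clamp applied to the command
    integral: float = 0.0
    prev_error: float = 0.0
    first: bool = True        # no derivative term on the very first sample

    def step(self, error: float, dt: float) -> float:
        if dt <= 0.0:
            raise ValueError("dt must be positive")
        # Accumulate the integral term with a simple rectangle rule.
        self.integral += error * dt
        # Backward-difference derivative, skipped on the first call.
        derivative = 0.0 if self.first else (error - self.prev_error) / dt
        self.prev_error = error
        self.first = False
        u = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Saturate the command so it stays within the assumed actuator limit.
        return max(-self.out_limit, min(self.out_limit, u))


# Example: turn a heading error (rad) into a bounded angular velocity command.
heading_pid = PID(kp=1.5, ki=0.0, kd=0.1, out_limit=1.0)
command = heading_pid.step(error=0.3, dt=0.05)
```

In a ROS node, a value like `command` would typically be written to the angular component of a `geometry_msgs/Twist` message sent to the mobile base. That wiring is omitted here because the topic names and control rate used on the BeetleBot are not stated.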

Architecture

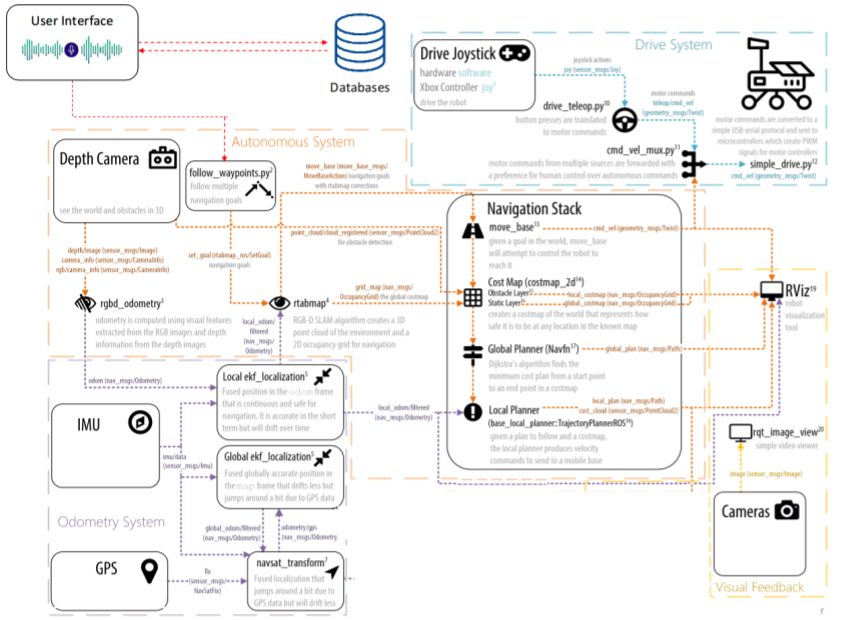

- Framework for BeetleBot

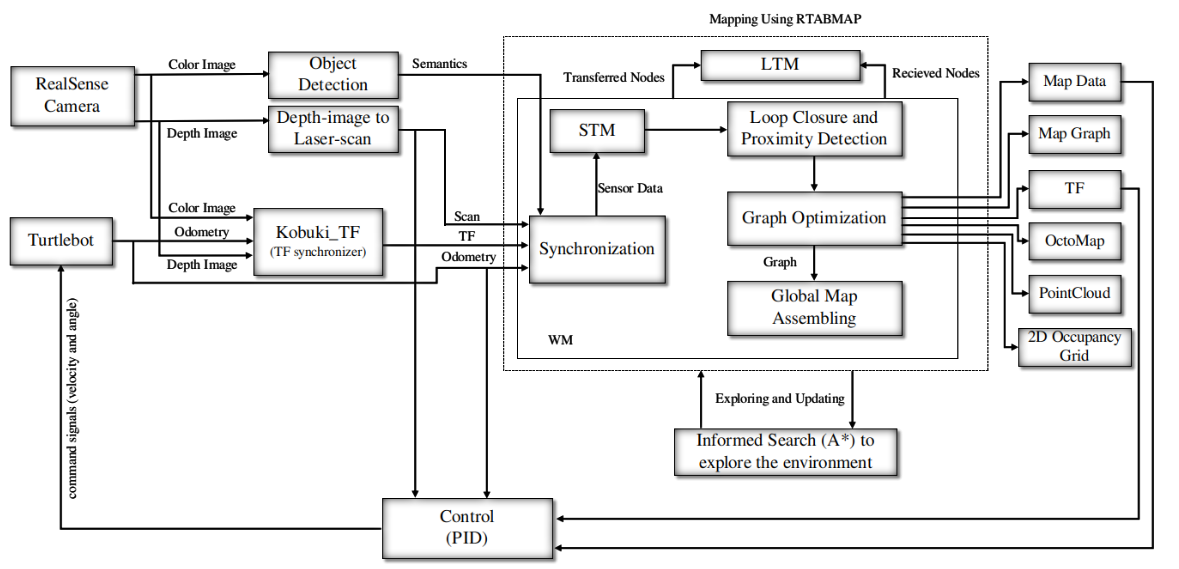

- Overall architecture of our autonomous navigation and mapping with object detection
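
The overview names A* as the search algorithm used while exploring the environment. The sketch below shows A* on a small 2D occupancy grid as a generic illustration only: the function name `astar`, the 4-connected neighborhood, the unit step cost, and the Manhattan heuristic are assumptions, not details taken from the BeetleBot code.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid (0 = free, 1 = occupied).

    Cells are (row, col) tuples. Returns a list of cells from start to
    goal, or None when the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries are (f, g, cell)
    g_cost = {start: 0}
    came_from = {}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None


# A wall in the middle row forces the path around the right-hand side.
demo_grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
demo_path = astar(demo_grid, (0, 0), (2, 0))
```

On a real robot the grid would come from the map built by the SLAM system, and cells near obstacles are usually inflated by the robot radius before planning. Both steps are left out of this sketch.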

Demonstration

- Mapping and Navigation [Watch this video]

- Obstacle Avoidance [Watch this video]